Agentic Software Development: A Paradigm Shift in Cognitive Design

Introduction

The evolution of software design has been marked by a series of shifts, each introducing new paradigms aimed at reducing users' cognitive load and improving usability. Traditionally, software development produced application-specific cognitive designs, in which users had to learn a distinct way of interacting with each application; the result was a significant cognitive burden. The emergence of natural language interfaces eased this burden somewhat, but such interfaces remained difficult to build. Large language models (LLMs) have transformed this space by making it far easier for software to understand and generate natural language. This breakthrough has given rise to a new approach to software design: agentic thinking.

The Traditional Cognitive Design

In the traditional approach to software development, each application came with its own set of rules, commands, and interaction patterns. Users needed to invest time and effort into learning these specifics, creating a significant cognitive load. This approach, while effective in the early days of computing, became increasingly cumbersome as the number of applications grew. Users had to constantly switch cognitive gears, leading to frustration and decreased productivity.

Significant Shifts to Decrease Software-Induced Cognitive Load on Users

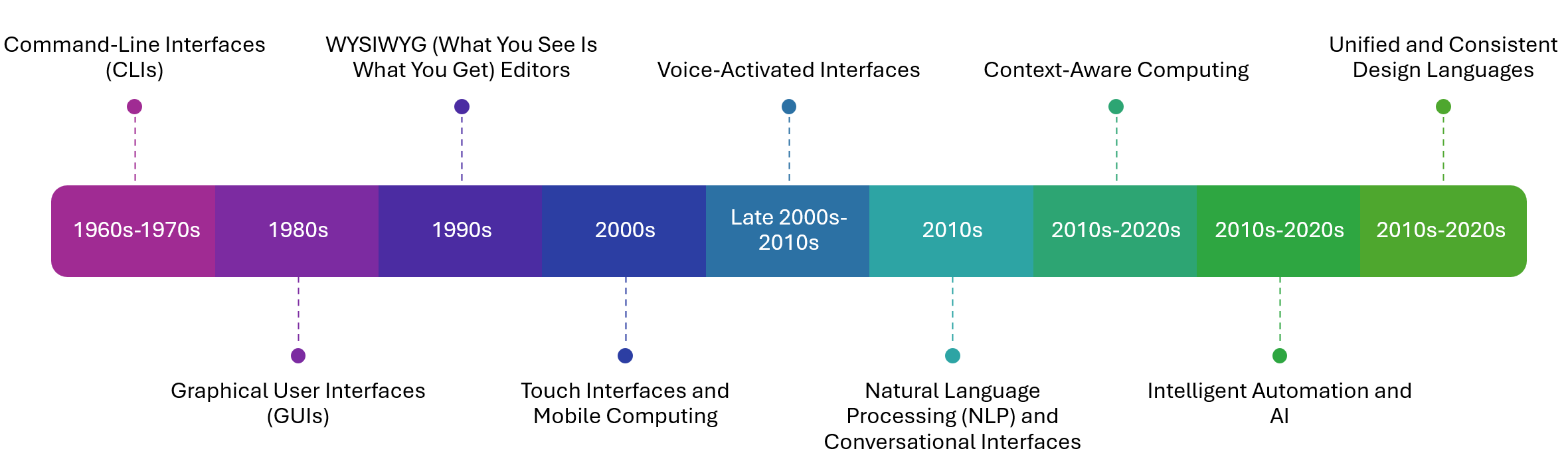

Over the decades, software design has undergone several significant shifts aimed at reducing users' cognitive load by making software more intuitive, user-friendly, and accessible. Here, we explore some of the key developments that have driven this progress.

1. Graphical User Interfaces (GUIs)

Before GUIs

Early software applications were operated predominantly through command-line interfaces (CLIs), which required users to memorize and type specific commands. This approach was efficient for expert users but placed a significant cognitive burden on the average user.

Introduction of GUIs

The arrival of graphical user interfaces (GUIs) in mainstream personal computing during the 1980s marked a revolutionary shift. GUIs replaced text-based commands with visual elements like icons, buttons, and menus. This change significantly reduced the cognitive load by providing intuitive, visually driven interactions. Users could now navigate software using a mouse and visual cues, making computing more accessible to the general public.

2. WYSIWYG (What You See Is What You Get) Editors

Early Document Editing

Before WYSIWYG editors, creating and editing documents involved learning complex markup languages or command sequences, such as those used by typesetting systems like troff and TeX or by early word processors.

Impact of WYSIWYG

WYSIWYG editors allowed users to see a real-time representation of their final document as they edited it. This approach bridged the gap between the creation process and the final output, making tasks like word processing, desktop publishing, and web design far more intuitive and less cognitively demanding.

3. Touch Interfaces and Mobile Computing

Desktop-Centric Design

For many years, software was designed with desktop computers and their peripherals (mouse, keyboard) in mind. This design paradigm did not translate well to mobile devices.

Emergence of Touch Interfaces

The rise of smartphones and tablets introduced touch interfaces, which further simplified user interactions. Swiping, tapping, and pinching are more natural and intuitive than using a mouse and keyboard. Mobile operating systems, such as iOS and Android, are designed around these interactions, reducing the learning curve and making technology more accessible to a wider audience.

4. Voice-Activated Interfaces

Early Voice Recognition

Early voice recognition systems were often unreliable and required extensive training on an individual user's voice, and many could recognize only a limited set of specific commands.

Modern Voice Assistants

Advancements in speech recognition technology led to far more reliable voice-activated interfaces, exemplified by virtual assistants like Siri, Alexa, and Google Assistant. These systems allow users to interact with software using natural language, significantly lowering the cognitive barrier. Users can perform tasks by speaking commands, which is especially beneficial for people with disabilities or those engaged in hands-free activities.

5. Natural Language Processing (NLP) and Conversational Interfaces

Early NLP Efforts

Initial attempts at NLP were rudimentary, relying largely on hand-written rules and keyword matching, and they often misinterpreted user input.

Advanced NLP and Chatbots

Recent advancements in NLP have enabled the creation of sophisticated conversational interfaces. Chatbots and virtual assistants can understand and respond to user queries in natural language, facilitating more intuitive interactions. These interfaces can handle complex queries, context, and even sentiment, providing a more seamless and human-like user experience.

6. Context-Aware Computing

Static User Interfaces

Traditional user interfaces were static, offering the same experience regardless of the context or user.

Adaptive and Context-Aware Systems

Modern software can adapt to the user's context, such as location, time of day, and usage patterns. Context-aware systems personalize the user experience, making interactions more relevant and reducing the need for users to manually adjust settings or navigate through irrelevant options.
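As a rough sketch of the pattern, the function below derives interface defaults from context signals instead of requiring the user to set them. The `Context` fields, the cutoff times, and the rules in `suggest_defaults` are all hypothetical, chosen only to illustrate the idea; a real system might instead learn such preferences from usage rather than hard-coding them.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Context:
    """Hypothetical context signals a context-aware app might observe."""
    local_time: time
    location: str           # e.g. "home", "office", "commuting"
    recent_apps: list[str]  # most recently used first


def suggest_defaults(ctx: Context) -> dict:
    """Derive UI defaults from context instead of asking the user.

    Every rule below is an illustrative assumption, not taken from a real product.
    """
    # Dark theme in the evening and at night (assumed cutoff times).
    night = ctx.local_time >= time(19, 0) or ctx.local_time < time(7, 0)
    settings = {"theme": "dark" if night else "light"}
    # Mute notifications while commuting (assumed rule).
    settings["notifications"] = "muted" if ctx.location == "commuting" else "normal"
    # Surface the three most recently used apps as shortcuts.
    settings["shortcuts"] = ctx.recent_apps[:3]
    return settings


if __name__ == "__main__":
    ctx = Context(time(21, 30), "commuting", ["mail", "maps", "music", "notes"])
    print(suggest_defaults(ctx))
```

The user never opens a settings screen here; the relevant choices are inferred from the situation, which is the cognitive saving the paragraph describes.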

7. Intelligent Automation and AI

Manual Task Execution

Users traditionally had to perform repetitive tasks manually, which was time-consuming and cognitively taxing.

Integration of AI

The integration of artificial intelligence (AI) and machine learning (ML) into software has led to intelligent automation. AI can predict user needs, automate repetitive tasks, and provide proactive suggestions. For instance, email clients can prioritize messages, and productivity software can suggest actions based on user behavior. This reduces the cognitive load by allowing users to focus on more critical tasks.

8. Unified and Consistent Design Languages

Fragmented Design Approaches

Previously, software from different developers often had vastly different interfaces, causing confusion and a steeper learning curve.

Design Systems and Frameworks

The adoption of unified design languages and frameworks, such as Google's Material Design and Apple's Human Interface Guidelines, has led to more consistent user experiences across applications. Consistent design principles help users transfer their knowledge from one application to another, reducing the cognitive load associated with learning new interfaces.

The Transition to Natural Language Interfaces

With the development of natural language interfaces, the cognitive demands on users began to decrease. These interfaces allowed users to interact with applications in a more intuitive and human-like manner. However, building such interfaces was not straightforward: it required significant NLP expertise, a deep understanding of user intents, and typically hand-crafted rules or intent classifiers trained on domain-specific examples. Despite these challenges, the potential benefits were clear: more accessible and user-friendly applications.

The Rise of Large Language Models

The introduction of large language models, from GPT-3 onward, marked a turning point in natural language understanding and generation. Trained on vast amounts of text, these models can interpret and generate human-like text with remarkable fluency. Because a single general-purpose model can handle a wide range of requests without task-specific training, building natural language interfaces has become far easier, paving the way for a new era of software design.

Agentic Thinking: A New Paradigm

Agentic thinking represents a fundamental shift in how we design and develop software. In this approach, software is driven by the user's intent rather than a predefined set of commands. Applications are designed as agents that can understand and respond to user intents in natural language. This shift offers several advantages:

- Reduced Cognitive Load: Users no longer need to learn the specifics of each application. Instead, they can interact with the software in a way that feels natural and intuitive.

- Increased Accessibility: Natural language interfaces make software more accessible to a broader audience, including those who may not be tech-savvy.

- Enhanced Flexibility: Applications can adapt to a wide range of user intents and contexts, providing a more personalized experience.

- Interoperability: Agentic applications can communicate with each other in natural language, enabling seamless integration and collaboration.
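To make the contrast with command-driven design concrete, here is a minimal sketch of intent-driven dispatch. In a real agentic application, the step that maps free-form text to an intent would typically be a language model call; here a hypothetical keyword-based `classify_intent` function stands in for it so the example runs without any model or external service. The intent names and handler functions are likewise invented for illustration.

```python
import re
from typing import Callable, Optional


# Hypothetical capabilities the application exposes. They are defined once,
# independently of how a user might phrase a request.
def create_event(request: str) -> str:
    return f"Creating a calendar event from: {request!r}"


def draft_message(request: str) -> str:
    return f"Drafting a message from: {request!r}"


HANDLERS: dict[str, Callable[[str], str]] = {
    "schedule": create_event,
    "message": draft_message,
}

# Stand-in for an LLM intent classifier (assumption: a real agent would ask a
# language model to choose among the known intents). Keyword matching is used
# only so the sketch runs on its own.
INTENT_KEYWORDS = {
    "schedule": {"meeting", "schedule", "calendar", "appointment"},
    "message": {"email", "message", "text", "send"},
}


def classify_intent(request: str) -> Optional[str]:
    words = set(re.findall(r"[a-z']+", request.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return None


def handle(request: str) -> str:
    """Route a free-form request by intent rather than by command syntax."""
    intent = classify_intent(request)
    if intent is None:
        # No recognized intent: ask the user instead of failing on bad syntax.
        return "I'm not sure what you'd like to do. Could you rephrase?"
    return HANDLERS[intent](request)


if __name__ == "__main__":
    for request in [
        "Set up a meeting with Ana on Friday",
        "Send Ana a quick note about the budget",
        "What's the weather like?",
    ]:
        print(handle(request))
```

The point is the shape rather than the classifier: different phrasings of the same goal reach the same handler, and the user never has to learn a command syntax. Replacing the keyword stand-in with a model call would change only `classify_intent`.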

Opportunities in Agentic Software Development

The agentic approach to software development opens up numerous opportunities:

- Personalized User Experiences: Applications can adapt to individual user preferences and behaviors, offering a more tailored experience.

- Improved Productivity: By reducing the cognitive load on users, agentic applications can enhance productivity and efficiency.

- Greater Inclusivity: Natural language interfaces can make technology more inclusive, enabling a wider range of users to interact with software.

- Innovative Applications: The flexibility of agentic applications can lead to the development of innovative solutions that were previously not possible.

Challenges in Agentic Software Development

Despite its promise, agentic software development also presents several challenges:

- Complexity in Intent Understanding: Accurately understanding user intents in natural language can be complex, especially when dealing with ambiguous or vague inputs.

- Contextual Awareness: Agents need to maintain contextual awareness to provide relevant responses, which can be challenging in dynamic environments.

- Security and Privacy: Handling natural language data raises concerns about security and privacy, especially when dealing with sensitive information.

- Integration with Legacy Systems: Many existing systems are not designed to support natural language interactions, posing integration challenges.
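The first two challenges often appear together: a request such as "cancel it" is ambiguous unless the agent remembers what "it" refers to. One common mitigation is to carry conversational context between turns and ask a clarifying question when the intent or its target cannot be resolved confidently. The sketch below assumes a hypothetical keyword-count scorer (again standing in for a language model), a hypothetical "the <name> meeting" pattern for recognizing items, and an arbitrary confidence cutoff.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical intents and trigger words; a real agent would score intents
# with a language model rather than with keyword counts.
INTENT_KEYWORDS = {
    "reschedule": {"move", "reschedule", "postpone"},
    "cancel": {"cancel", "delete", "drop"},
}
# Clarify unless one intent receives more than half of the evidence
# (an arbitrary cutoff chosen for illustration).
CONFIDENCE_THRESHOLD = 0.5


@dataclass
class ConversationState:
    """Minimal context carried between turns: the last item the user named."""
    last_item: Optional[str] = None


def respond(text: str, state: ConversationState) -> str:
    tokens = set(re.findall(r"[a-z']+", text.lower()))

    # Update context when the user names an item explicitly
    # (hypothetical pattern: "the <name> meeting").
    named = re.search(r"the (\w+) meeting", text.lower())
    if named:
        state.last_item = f"{named.group(1)} meeting"

    # Score each intent by keyword hits; the top share acts as a confidence.
    scores = {intent: len(tokens & words) for intent, words in INTENT_KEYWORDS.items()}
    total = sum(scores.values())
    if total == 0:
        return "What would you like me to do?"
    best = max(scores, key=scores.get)
    if scores[best] / total <= CONFIDENCE_THRESHOLD:
        options = " or ".join(sorted(i for i, s in scores.items() if s > 0))
        return f"Did you want to {options}?"

    # Resolve the target from this turn or from context; ask if neither works.
    target = state.last_item if (named or "it" in tokens) else None
    if target is None:
        return "Which item do you mean?"
    return f"OK: {best} the {target}."


if __name__ == "__main__":
    state = ConversationState()
    print(respond("Please move the budget meeting to Friday", state))
    print(respond("Actually, cancel it", state))
    print(respond("Move or cancel it?", ConversationState()))
```

Deferring to the user when the evidence is split or a reference cannot be resolved costs one extra turn but avoids acting on a wrong guess, which matters most for destructive actions such as cancelling.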

Conclusion

The agentic way of developing software represents a significant shift in cognitive design, driven by the user's intent rather than predefined commands. By leveraging the capabilities of large language models, we can create applications that are more intuitive, accessible, and flexible. While there are challenges to overcome, the potential benefits make this an exciting and promising direction for the future of software development. As we continue to explore and refine agentic thinking, we can look forward to a new era of user-centric, intelligent applications that transform how we interact with technology.